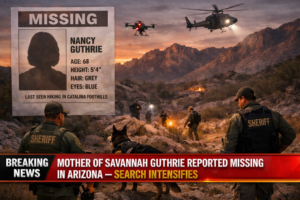

Experts Warn AI Deepfakes Are Fueling a Collapse of Trust Online — Venezuela Misinformation Case Highlights Crisis

WASHINGTON, D.C. – As artificial intelligence continues its rapid evolution, experts are warning that AI-generated deepfakes and manipulated digital content are undermining the very foundation of trust online, threatening public confidence in news, social media, and digital communication. The warning has come into sharp focus after a wave of AI-driven misinformation linked to the recent U.S.-Venezuela conflict flooded social networks, confusing global audiences and illustrating how easily truth can be blurred in the digital age.

At the heart of this digital upheaval was the sudden spread of deepfake images and videos purportedly showing the capture of Venezuelan President Nicolás Maduro and widespread celebrations in Caracas. Within minutes of the initial posts, these visuals had racked up millions of views across platforms including X, Instagram, TikTok, and Facebook, even though fact-checking organizations identified them as fake or misleading.

A Digital Mirage: How AI Content Flooded Social Media

In the midst of breaking news coverage of military operations involving Venezuela and the United States, social media users were bombarded with AI-generated clips and photos that seemed to show dramatic developments on the ground. Some visuals depicted Maduro being escorted by U.S. forces or jubilant crowds flooding the streets, scenarios that fact-checkers later debunked or traced to unrelated, older footage. Several pieces of content even recycled images from other events, such as a college run at UCLA, and misrepresented them as on-the-ground scenes in Caracas.

One AI-generated image appearing to depict Maduro alongside foreign agents turned out to be entirely synthetic, while other posts drew on footage of unrelated U.S. military exercises. This mix of real, repurposed, and fabricated content made it extraordinarily difficult for ordinary users, and even for the platforms themselves, to distinguish authentic reporting from deception.

Trust Erosion: Experts Sound the Alarm

Digital security specialists and communication researchers see this incident as one manifestation of a broader, long-developing trend. In their view, the most fundamental challenge posed by deepfakes is not simply the spread of falsehoods but the erosion of baseline trust in digital information.

“At a time when people rely on digital content for crucial information, seeing manipulated visuals during major global events risks permanently weakening trust,” said Jeff Hancock, founding director of the Stanford Social Media Lab, in a recent interview. Hancock explained that misinformation created by AI systems capable of generating highly realistic content erodes instinctive trust: the default belief that online communication is authentic until proven otherwise.

This problem is compounded when misinformation circulates faster than platforms can flag or remove it. As deepfakes improve in quality and spread more widely, users may come to view everything they see online with persistent skepticism, from breaking news to personal messages. Such distrust has far-reaching social implications, extending beyond public discourse to democratic processes, financial systems, and interpersonal relationships.

The “Liar’s Dividend”: A New Weapon in Information Warfare

Analysts also warn of a phenomenon known as the “liar’s dividend,” in which political actors exploit uncertainty about the authenticity of information. When AI can fabricate plausible evidence, public figures can dismiss genuine evidence by claiming it is AI-generated, evading accountability and deepening confusion. Scholars have examined this tactic for several years, and it grows more potent as generative AI tools improve.

The digital environment is now fertile ground for such strategies: the mere existence of deepfakes can be used to undercut factual reporting. In this context, public trust does not merely diminish because people see fake content; it collapses because people begin to doubt everything until it has been independently verified.

From Political Turmoil to Personal Fraud

The issues stretch far beyond geopolitics and news reporting. Deepfakes have been used in personal scams, financial fraud, and social engineering attacks. According to industry reports, global financial losses related to AI-generated fraud and identity manipulation could reach tens of billions of dollars in the coming years.

Cybersecurity experts frequently cite scenarios in which deepfake audio is used to impersonate corporate executives and authorize fraudulent fund transfers, or in which convincing fake videos trick employees into believing they are acting on legitimate directives. These cases illustrate how deepfake misuse is reshaping digital crime and eroding defenses that once depended on trust in familiar voices and faces.

Platforms’ Struggle to Keep Up

Major social media platforms such as X, Facebook, and TikTok have scaled back content moderation in recent years, exacerbating the problem. With fewer human moderators reviewing content, AI-generated misinformation can spread unchecked until it has already gone viral. Meanwhile, AI watermarking and detection tools such as Google’s SynthID provide some safeguards, but they are far from foolproof: a watermark helps only if the generating tool applied it, and detectors struggle to keep pace with rapidly improving generative models.

Some platforms are experimenting with context panels, community fact-checks, and algorithm tweaks to limit deepfake impact. However, without broader regulatory frameworks and international coordination, these measures may not be enough to stop the tidal wave of misinformation.

What the Public Can Do

Experts emphasize that combating the collapse of trust will require educating the public about digital verification, promoting AI literacy, and encouraging healthy skepticism. Users are urged to pause and verify sources before sharing content, rely on established news outlets for confirmation, and use AI detection tools when possible.

At the same time, lawmakers and regulators face mounting pressure to develop robust policies that hold creators and distributors of harmful AI content accountable and that require platforms to adopt stronger verification systems.

Looking Ahead

Deepfake technology is not retreating; it is advancing, and its implications will continue to ripple across news media, politics, cybersecurity, and personal communication. If left unchecked, this wave of AI-generated misinformation may fundamentally reshape how people perceive reality itself, blurring the line between fact and fiction.

As users witness misinformation in high-stakes contexts like international conflict, elections, and economic crises, the question becomes less about whether deepfakes are dangerous, and more about how societies can rebuild trust in a world where seeing isn’t always believing.